Introduction

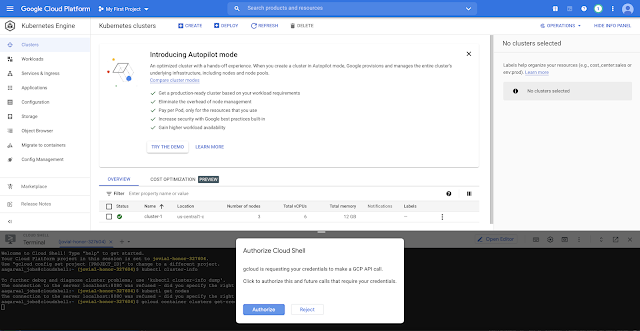

I will show how to create a PersistentVolume and a PersistentVolumeClaim on Kubernetes. I am using Minikube as the cluster for this walkthrough.

We will first create an index.html file on the Minikube node, then expose its directory through a PersistentVolume and a PersistentVolumeClaim so that an Nginx pod can serve the file.

Storage

You can log in to the Minikube node using:

$ minikube ssh

Last login: Sun Oct 10 01:14:15 2021 from 192.168.49.1

docker@minikube:~$

We will now create the index.html file at /mnt/data/index.html:

$ sudo mkdir /mnt/data

$ sudo sh -c "echo 'Hello from PVC example' > /mnt/data/index.html"

PersistentVolume

We can define a PersistentVolume in YAML, saved here as pv.yaml:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: ashok-pv-vol
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/mnt/data"

Apply the PV:

$ kubectl apply -f pv.yaml

persistentvolume/ashok-pv-vol created

We can check the status of the PersistentVolume:

$ kubectl get pv

NAME           CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
ashok-pv-vol   20Gi       RWO            Retain           Available           manual                  4s

PersistentVolumeClaim

We will define a PersistentVolumeClaim in YAML, saved here as pvc.yaml:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ashok-pv-claim
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi

The PersistentVolumeClaim can be created as below:

$ kubectl apply -f pvc.yaml

persistentvolumeclaim/ashok-pv-claim created

We can check the status of the PersistentVolumeClaim:

$ kubectl get pvc

NAME             STATUS   VOLUME         CAPACITY   ACCESS MODES   STORAGECLASS   AGE
ashok-pv-claim   Bound    ashok-pv-vol   20Gi       RWO            manual         8s

The PersistentVolume status has now changed to Bound:

$ kubectl get pv

NAME           CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                    STORAGECLASS   REASON   AGE
ashok-pv-vol   20Gi       RWO            Retain           Bound    default/ashok-pv-claim   manual                  2m31s
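Binding is usually quick, but in a script it is safer to wait for it than to assume it. Below is a minimal sketch that polls the phase of a PV or PVC until it reaches the value we want. The helper name wait_for_phase, the one-second interval and the default of 30 attempts are my own choices for illustration, not part of kubectl.

```shell
# Poll a PV or PVC until its .status.phase equals the wanted value.
# Arguments: resource (e.g. pvc/ashok-pv-claim), wanted phase, max attempts.
# wait_for_phase is a hypothetical helper name, not a kubectl feature.
wait_for_phase() {
  resource="$1"
  wanted="$2"
  attempts="${3:-30}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # Read only the phase field instead of parsing the table output.
    phase="$(kubectl get "$resource" -o jsonpath='{.status.phase}' 2>/dev/null)"
    if [ "$phase" = "$wanted" ]; then
      echo "$resource is $phase"
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  echo "$resource did not reach $wanted (last phase: ${phase:-unknown})" >&2
  return 1
}
```

For this example it could be called as wait_for_phase pvc/ashok-pv-claim Bound, and the same call with pv/ashok-pv-vol checks the volume side.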

Let's create a pod that uses this statically provisioned volume.

Using PVC in Pod

We will define the pod in YAML, saved here as pv-pod.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: ashok-pv-pod
spec:
  volumes:
    - name: ashok-pv-storage
      persistentVolumeClaim:
        claimName: ashok-pv-claim
  containers:
    - name: ashok-pv-container
      image: nginx
      ports:
        - containerPort: 80
          name: "http-server"
      volumeMounts:
        - mountPath: "/usr/share/nginx/html"
          name: ashok-pv-storage

Let's create the pod:

$ kubectl apply -f pv-pod.yaml

pod/ashok-pv-pod created

We will check the status of the pod:

$ kubectl get po

NAME           READY   STATUS    RESTARTS   AGE
ashok-pv-pod   1/1     Running   0          79s
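A Running pod does not by itself prove that the volume was mounted where Nginx expects it. As a rough check, we can read the file from inside the container and compare it with the text we wrote on the node. The helper name check_pod_index is hypothetical. The path is the mountPath from the pod manifest plus index.html.

```shell
# Compare the file Nginx serves inside the pod with the text we expect.
# check_pod_index is a hypothetical helper; the path is the pod's mountPath.
check_pod_index() {
  pod="$1"
  expected="$2"
  # kubectl exec runs cat inside the pod's container.
  actual="$(kubectl exec "$pod" -- cat /usr/share/nginx/html/index.html)" || return 1
  if [ "$actual" = "$expected" ]; then
    echo "index.html matches"
  else
    echo "unexpected content: $actual" >&2
    return 1
  fi
}
```

With the names used in this post, the call would be check_pod_index ashok-pv-pod 'Hello from PVC example'.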

To make this pod accessible from a browser, you can use kubectl port-forward with the pod name:

$ kubectl port-forward ashok-pv-pod 8888:80

Forwarding from 127.0.0.1:8888 -> 80

Forwarding from [::1]:8888 -> 80

Open http://localhost:8888/ in the browser. The page should show the contents of index.html, "Hello from PVC example".
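If you prefer to check the page from the terminal, the port-forward and the request can be combined in one step. This is only a sketch: fetch_via_port_forward is a hypothetical helper name, the two-second pause is a guess at how long the tunnel needs to come up, and it assumes curl is installed on your machine.

```shell
# Start a port-forward in the background, fetch the page once, then stop it.
# fetch_via_port_forward is a hypothetical helper; the 2-second pause is a guess.
fetch_via_port_forward() {
  pod="$1"
  local_port="${2:-8888}"
  kubectl port-forward "pod/$pod" "$local_port:80" >/dev/null 2>&1 &
  pf_pid=$!
  sleep 2   # give the tunnel a moment to come up
  status=0
  curl -fsS "http://localhost:$local_port/" || status=$?
  # Stop the background port-forward whether or not the request worked.
  kill "$pf_pid" 2>/dev/null || true
  wait "$pf_pid" 2>/dev/null || true
  return "$status"
}
```

Calling fetch_via_port_forward ashok-pv-pod should print the page body and leave no port-forward running afterwards.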

Clean Up

We can delete the pod, PVC and PV using the commands below:

$ kubectl delete po ashok-pv-pod

pod "ashok-pv-pod" deleted

$ kubectl delete pvc ashok-pv-claim

persistentvolumeclaim "ashok-pv-claim" deleted

$ kubectl delete pv ashok-pv-vol

persistentvolume "ashok-pv-vol" deleted
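The same clean-up can be wrapped in one function so that it is safe to run more than once. The function name cleanup_pv_demo is hypothetical. The --ignore-not-found flag makes kubectl delete succeed even when an object is already gone.

```shell
# Delete the demo objects in dependency order: pod, then claim, then volume.
# cleanup_pv_demo is a hypothetical helper; --ignore-not-found makes reruns harmless.
cleanup_pv_demo() {
  kubectl delete pod ashok-pv-pod --ignore-not-found &&
  kubectl delete pvc ashok-pv-claim --ignore-not-found &&
  kubectl delete pv ashok-pv-vol --ignore-not-found
}
```

Deleting these objects does not remove the files under /mnt/data on the Minikube node. They stay there until you delete them yourself from a minikube ssh session.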

Let me know if you need any help.

Happy Coding !!!