Apache Sqoop

Sqoop is a tool for efficient data transfer between Hadoop (HDFS) to relational database. It use Map Reduce jobs to perform operations.

Sqoop Connector

This is a modular component to enable Map Reduce to perform import/export. It is a pluggable piece that fetches metadata(column, data Type, primary columns etc and map to Java equivalent) of transfer data to optimize it. The build-in sqoop connector, support most of the popular database and can support additional third party sqoop connectors. Therefore, there will be different connector to support MySQL or PostgreSQL or Oracle etc.

JDBC Driver

A JDBC driver is a software component enabling a Java application to interact with a database. To connect with individual databases, JDBC (the Java Database Connectivity API) requires drivers for each database. For example, MySQL has its own driver main class (com.mysql.jdbc.Driver).

Sqoop Connector (specific to database) use JDBC driver to connect to Database server.

Note: JDBC drivers are not shipped with Sqoop due to incompatible licenses and thus you must download and install one manually.

Prerequisites

- Hadoop is already installed with on standalone or cluster mode. If not, please configure it using article.

- Sqoop must be configured on machine. If not, please configure it using blog.

Sqoop Operations

In this tutorial, we will discuss about import data from MySQL using Sqoop.

Importing MySQL driver

Here, we will download the MySQL and place it in $SQOOP_HOME/lib

Download MySQL driver (JDBC Driver).

$wget http://central.maven.org/maven2/mysql/mysql-connector-java/5.1.41/mysql-connector-java-5.1.41.jar

Move the driver to lib folder

$mv mysql-connector-java-5.1.41.jar $SQOOP_HOME/lib

Note: If MySQL driver is not imported then "Could not load db driver class" error will occur.

Note: If MySQL driver is not imported then "Could not load db driver class" error will occur.

Import Data to HDFS

Create table in MySQL server and insert data as shown below

mysql>CREATE DATABASE hadoopguide;

mysql>use hadoopguide;mysql>CREATE TABLE product(id INT NOT NULL PRIMARY KEY AUTO_INCREMENT, product_name VARCHAR(64) NOT NULL, price DECIMAL(10,2), date DATE, version INT, design_comment VARCHAR(100));

mysql> INSERT INTO product VALUES(NULL,'sprocket',0.25,'2010-02-10',1,'Connects two gizmos');

mysql> INSERT INTO product VALUES(NULL,'gadget',99.99,'1983-08-13',13,'Our flagship product');

mysql> INSERT INTO product VALUES(NULL,'gizmo',4.00,'2009-11-30',4,NULL);

Ensure hadoop processes are running.

hduser@pooja:~$ jps

10016 SecondaryNameNode

9825 DataNode

10390 NodeManager

10250 ResourceManager

12650 Jps

9692 NameNode

If not, run script start-dfs.sh and start-yarn.sh

Run the import command

As we have only 3 rows, we will just run 1 mapper (by specifying -m 1). Note:Use mysql username and password that exists.

$sqoop import --connect jdbc:mysql://localhost:3306/hadoopguide --username sqoopuser -P --table product -m 1

17/09/05 19:03:47 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6As we have only 3 rows, we will just run 1 mapper (by specifying -m 1). Note:Use mysql username and password that exists.

$sqoop import --connect jdbc:mysql://localhost:3306/hadoopguide --username sqoopuser -P --table product -m 1

Enter password:

17/09/05 19:03:47 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

17/09/05 19:03:47 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

17/09/05 19:03:47 INFO tool.CodeGenTool: Beginning code generation

17/09/05 19:03:48 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `product` AS t LIMIT 1

17/09/05 19:03:48 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `product` AS t LIMIT 1

17/09/05 19:03:48 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/hduser/hadoop

Note: /tmp/sqoop-hduser/compile/0f2da5f5a28c59d094b9dfd9952dffee/product.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

17/09/05 19:03:57 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hduser/compile/0f2da5f5a28c59d094b9dfd9952dffee/product.jar

17/09/05 19:03:57 WARN manager.MySQLManager: It looks like you are importing from mysql.

17/09/05 19:03:57 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

17/09/05 19:03:57 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

17/09/05 19:03:57 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

17/09/05 19:03:57 INFO mapreduce.ImportJobBase: Beginning import of product

17/09/05 19:03:57 INFO Configuration.deprecation: mapred.job.tracker is deprecated. Instead, use mapreduce.jobtracker.address

17/09/05 19:03:59 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

17/09/05 19:04:03 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

17/09/05 19:04:04 INFO client.RMProxy: Connecting to ResourceManager at master/192.168.1.101:8032

17/09/05 19:04:21 INFO db.DBInputFormat: Using read commited transaction isolation

17/09/05 19:04:21 INFO mapreduce.JobSubmitter: number of splits:1

17/09/05 19:04:22 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1504654311177_0002

17/09/05 19:04:25 INFO impl.YarnClientImpl: Submitted application application_1504654311177_0002

17/09/05 19:04:25 INFO mapreduce.Job: The url to track the job: http://master:8088/proxy/application_1504654311177_0002/

17/09/05 19:04:25 INFO mapreduce.Job: Running job: job_1504654311177_0002

17/09/05 19:04:58 INFO mapreduce.Job: Job job_1504654311177_0002 running in uber mode : false

17/09/05 19:04:58 INFO mapreduce.Job: map 0% reduce 0%

17/09/05 19:05:22 INFO mapreduce.Job: Task Id : attempt_1504654311177_0002_m_000000_0, Status : FAILED

17/09/05 19:05:37 INFO mapreduce.Job: Task Id : attempt_1504654311177_0002_m_000000_1, Status : FAILED

17/09/05 19:05:58 INFO mapreduce.Job: map 100% reduce 0%

17/09/05 19:05:59 INFO mapreduce.Job: Job job_1504654311177_0002 completed successfully

17/09/05 19:06:00 INFO mapreduce.Job: Counters: 31

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=155400

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=87

HDFS: Number of bytes written=130

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Failed map tasks=2

Launched map tasks=3

Other local map tasks=3

Total time spent by all maps in occupied slots (ms)=50839

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=50839

Total vcore-milliseconds taken by all map tasks=50839

Total megabyte-milliseconds taken by all map tasks=52059136

Map-Reduce Framework

Map input records=3

Map output records=3

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=318

CPU time spent (ms)=2330

Physical memory (bytes) snapshot=174493696

Virtual memory (bytes) snapshot=1925107712

Total committed heap usage (bytes)=108527616

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=130

17/09/05 19:06:00 INFO mapreduce.ImportJobBase: Transferred 130 bytes in 116.295 seconds (1.1178 bytes/sec)

17/09/05 19:06:00 INFO mapreduce.ImportJobBase: Retrieved 3 records.

Note: The java code will be generated in same folder with table name (in our case product.java).

Verify data in HDFS

In HDFS, the folder will be created with table name (in our case product)

hduser@pooja:~$ hdfs dfs -ls /user/hduser/product/

Found 2 items

-rw-r--r-- 1 hduser supergroup 0 2017-09-05 19:05 /user/hduser/product/_SUCCESS

-rw-r--r-- 1 hduser supergroup 130 2017-09-05 19:05 /user/hduser/product/part-m-00000

hduser@pooja:~$ hdfs dfs -text /user/hduser/product/part-m-00000

17/09/05 19:13:19 INFO lzo.GPLNativeCodeLoader: Loaded native gpl library from the embedded binaries

17/09/05 19:13:19 INFO lzo.LzoCodec: Successfully loaded & initialized native-lzo library [hadoop-lzo rev f12b7f24913ffbde938b8d140e8a7b22183221a0]

1,sprocket,0.25,2010-02-10,1,Connects two gizmos

2,gadget,99.99,1983-08-13,13,Our flagship product

3,gizmo,4.00,2009-11-30,4,null

Import with file compression

Sqoop is capable of importing in few different file format such as TextFile (default one), Sequence files,Avro datafiles, and Parquet files.

We will import data in Avro format as shown below:

$ sqoop import --connect jdbc:mysql://localhost:3306/hadoopguide --username root -P --table product -m 1 --class-name ProductHolder --as-avrodatafile --target-dir product_avro_files1 --bindir .

17/09/05 21:04:55 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

Enter password:

17/09/05 21:00:13 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

17/09/05 21:00:13 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

17/09/05 21:00:13 INFO tool.CodeGenTool: Beginning code generation

17/09/05 21:00:14 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `product` AS t LIMIT 1

17/09/05 21:00:14 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `product` AS t LIMIT 1

17/09/05 21:00:14 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/hduser/hadoop

Note: ./ProductHolder.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

17/09/05 21:00:22 INFO orm.CompilationManager: Writing jar file: ./ProductHolder.jar

17/09/05 21:00:24 WARN manager.MySQLManager: It looks like you are importing from mysql.

17/09/05 21:00:24 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

17/09/05 21:00:24 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

17/09/05 21:00:24 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

17/09/05 21:00:24 INFO mapreduce.ImportJobBase: Beginning import of product

17/09/05 21:00:24 INFO Configuration.deprecation: mapred.job.tracker is deprecated. Instead, use mapreduce.jobtracker.address

17/09/05 21:00:25 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

17/09/05 21:00:30 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `product` AS t LIMIT 1

17/09/05 21:00:30 INFO mapreduce.DataDrivenImportJob: Writing Avro schema file: ./ProductHolder.avsc

17/09/05 21:00:31 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

17/09/05 21:00:31 INFO client.RMProxy: Connecting to ResourceManager at master/192.168.1.101:8032

17/09/05 21:00:45 INFO db.DBInputFormat: Using read commited transaction isolation

17/09/05 21:00:45 INFO mapreduce.JobSubmitter: number of splits:1

17/09/05 21:00:46 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1504654311177_0008

17/09/05 21:00:47 INFO impl.YarnClientImpl: Submitted application application_1504654311177_0008

17/09/05 21:00:47 INFO mapreduce.Job: The url to track the job: http://master:8088/proxy/application_1504654311177_0008/

17/09/05 21:00:47 INFO mapreduce.Job: Running job: job_1504654311177_0008

17/09/05 21:01:14 INFO mapreduce.Job: Job job_1504654311177_0008 running in uber mode : false

17/09/05 21:01:14 INFO mapreduce.Job: map 0% reduce 0%

17/09/05 21:01:35 INFO mapreduce.Job: map 100% reduce 0%

17/09/05 21:01:36 INFO mapreduce.Job: Job job_1504654311177_0008 completed successfully

17/09/05 21:01:37 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=155892

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=87

HDFS: Number of bytes written=856

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=16596

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=16596

Total vcore-milliseconds taken by all map tasks=16596

Total megabyte-milliseconds taken by all map tasks=16994304

Map-Reduce Framework

Map input records=3

Map output records=3

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=271

CPU time spent (ms)=4050

Physical memory (bytes) snapshot=198721536

Virtual memory (bytes) snapshot=1922297856

Total committed heap usage (bytes)=118489088

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=856

17/09/05 21:01:38 INFO mapreduce.ImportJobBase: Transferred 856 bytes in 66.6089 seconds (12.8511 bytes/sec)

17/09/05 21:01:38 INFO mapreduce.ImportJobBase: Retrieved 3 records.

Enter password:

17/09/05 21:00:13 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

17/09/05 21:00:13 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

17/09/05 21:00:13 INFO tool.CodeGenTool: Beginning code generation

17/09/05 21:00:14 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `product` AS t LIMIT 1

17/09/05 21:00:14 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `product` AS t LIMIT 1

17/09/05 21:00:14 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/hduser/hadoop

Note: ./ProductHolder.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

17/09/05 21:00:22 INFO orm.CompilationManager: Writing jar file: ./ProductHolder.jar

17/09/05 21:00:24 WARN manager.MySQLManager: It looks like you are importing from mysql.

17/09/05 21:00:24 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

17/09/05 21:00:24 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

17/09/05 21:00:24 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

17/09/05 21:00:24 INFO mapreduce.ImportJobBase: Beginning import of product

17/09/05 21:00:24 INFO Configuration.deprecation: mapred.job.tracker is deprecated. Instead, use mapreduce.jobtracker.address

17/09/05 21:00:25 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

17/09/05 21:00:30 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `product` AS t LIMIT 1

17/09/05 21:00:30 INFO mapreduce.DataDrivenImportJob: Writing Avro schema file: ./ProductHolder.avsc

17/09/05 21:00:31 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

17/09/05 21:00:31 INFO client.RMProxy: Connecting to ResourceManager at master/192.168.1.101:8032

17/09/05 21:00:45 INFO db.DBInputFormat: Using read commited transaction isolation

17/09/05 21:00:45 INFO mapreduce.JobSubmitter: number of splits:1

17/09/05 21:00:46 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1504654311177_0008

17/09/05 21:00:47 INFO impl.YarnClientImpl: Submitted application application_1504654311177_0008

17/09/05 21:00:47 INFO mapreduce.Job: The url to track the job: http://master:8088/proxy/application_1504654311177_0008/

17/09/05 21:00:47 INFO mapreduce.Job: Running job: job_1504654311177_0008

17/09/05 21:01:14 INFO mapreduce.Job: Job job_1504654311177_0008 running in uber mode : false

17/09/05 21:01:14 INFO mapreduce.Job: map 0% reduce 0%

17/09/05 21:01:35 INFO mapreduce.Job: map 100% reduce 0%

17/09/05 21:01:36 INFO mapreduce.Job: Job job_1504654311177_0008 completed successfully

17/09/05 21:01:37 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=155892

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=87

HDFS: Number of bytes written=856

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=16596

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=16596

Total vcore-milliseconds taken by all map tasks=16596

Total megabyte-milliseconds taken by all map tasks=16994304

Map-Reduce Framework

Map input records=3

Map output records=3

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=271

CPU time spent (ms)=4050

Physical memory (bytes) snapshot=198721536

Virtual memory (bytes) snapshot=1922297856

Total committed heap usage (bytes)=118489088

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=856

17/09/05 21:01:38 INFO mapreduce.ImportJobBase: Transferred 856 bytes in 66.6089 seconds (12.8511 bytes/sec)

17/09/05 21:01:38 INFO mapreduce.ImportJobBase: Retrieved 3 records.

Verify avro file in HDFS

$ hdfs dfs -text /user/hduser/product_avro_files/part-m-00000.avro

{"id":{"int":1},"product_name":{"string":"sprocket"},"price":{"string":"0.25"},"date":{"long":1265788800000},"version":{"int":1},"design_comment":{"string":"Connects two gizmos"}}

{"id":{"int":2},"product_name":{"string":"gadget"},"price":{"string":"99.99"},"date":{"long":429606000000},"version":{"int":13},"design_comment":{"string":"Our flagship product"}}

{"id":{"int":3},"product_name":{"string":"gizmo"},"price":{"string":"4.00"},"date":{"long":1259568000000},"version":{"int":4},"design_comment":null}

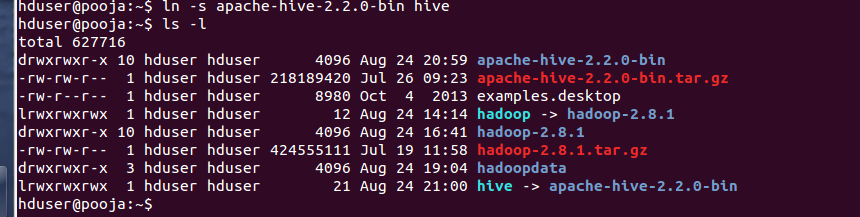

Importing Data in Hive

Make sure hive install on machine. If not, please refer to article.

$sqoop import --connect jdbc:mysql://localhost:3306/hadoopguide --username sqoopuser -P --table product -m 1 --hive-import

---Snippet$sqoop import --connect jdbc:mysql://localhost:3306/hadoopguide --username sqoopuser -P --table product -m 1 --hive-import

17/09/05 19:45:40 INFO mapreduce.Job: map 100% reduce 0%

17/09/05 19:45:42 INFO mapreduce.Job: Job job_1504654311177_0003 completed successfully

17/09/05 19:45:42 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=155400

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=87

HDFS: Number of bytes written=130

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=12832

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=12832

Total vcore-milliseconds taken by all map tasks=12832

Total megabyte-milliseconds taken by all map tasks=13139968

Map-Reduce Framework

Map input records=3

Map output records=3

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=219

CPU time spent (ms)=1770

Physical memory (bytes) snapshot=171204608

Virtual memory (bytes) snapshot=1924284416

Total committed heap usage (bytes)=110624768

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=130

17/09/05 19:45:42 INFO mapreduce.ImportJobBase: Transferred 130 bytes in 70.0458 seconds (1.8559 bytes/sec)

17/09/05 19:45:42 INFO mapreduce.ImportJobBase: Retrieved 3 records.

17/09/05 19:45:42 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `product` AS t LIMIT 1

17/09/05 19:45:42 WARN hive.TableDefWriter: Column price had to be cast to a less precise type in Hive

17/09/05 19:45:42 WARN hive.TableDefWriter: Column date had to be cast to a less precise type in Hive

17/09/05 19:45:43 INFO hive.HiveImport: Loading uploaded data into Hive

17/09/05 19:45:50 INFO hive.HiveImport: SLF4J: Class path contains multiple SLF4J bindings.

17/09/05 19:45:50 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/home/hduser/apache-hive-2.2.0-bin/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

17/09/05 19:45:50 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/home/hduser/hadoop-2.8.1/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

17/09/05 19:45:50 INFO hive.HiveImport: SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

17/09/05 19:45:50 INFO hive.HiveImport: SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

17/09/05 19:46:00 INFO hive.HiveImport:

17/09/05 19:46:00 INFO hive.HiveImport: Logging initialized using configuration in jar:file:/home/hduser/apache-hive-2.2.0-bin/lib/hive-common-2.2.0.jar!/hive-log4j2.properties Async: true

17/09/05 19:46:33 INFO hive.HiveImport: OK

17/09/05 19:46:33 INFO hive.HiveImport: Time taken: 6.267 seconds

17/09/05 19:46:36 INFO hive.HiveImport: Loading data to table default.product

17/09/05 19:46:39 INFO hive.HiveImport: OK

17/09/05 19:46:39 INFO hive.HiveImport: Time taken: 5.88 seconds

17/09/05 19:46:40 INFO hive.HiveImport: Hive import complete.

17/09/05 19:46:40 INFO hive.HiveImport: Export directory is contains the _SUCCESS file only, removing the directory.

Verify table in Hive

Hive managed table will be created with name of DB table (in our case it is product).

hive> select * from product;

OK

1 sprocket 0.25 2010-02-10 1 Connects two gizmos

2 gadget 99.99 1983-08-13 13 Our flagship product

3 gizmo 4.0 2009-11-30 4 null

Time taken: 10.406 seconds, Fetched: 3 row(s)

I hope you are able to able to successfully import data into HDFS. If still face issues, please write to me or refer to problem I faced.

Happy Coding!!!!

Problem Faced

If MySQL Driver not present in sqoop classpath, you will face below problem.

Solution: Refer to 'Importing MySQL driver' section above.

java.lang.RuntimeException: Could not load db driver class: com.mysql.jdbc.Driver

at org.apache.sqoop.manager.SqlManager.makeConnection(SqlManager.java:856)

at org.apache.sqoop.manager.GenericJdbcManager.getConnection(GenericJdbcManager.java:52)

at org.apache.sqoop.manager.SqlManager.execute(SqlManager.java:744)

at org.apache.sqoop.manager.SqlManager.execute(SqlManager.java:767)

at org.apache.sqoop.manager.SqlManager.getColumnInfoForRawQuery(SqlManager.java:270)

at org.apache.sqoop.manager.SqlManager.getColumnTypesForRawQuery(SqlManager.java:241)

at org.apache.sqoop.manager.SqlManager.getColumnTypes(SqlManager.java:227)

at org.apache.sqoop.manager.ConnManager.getColumnTypes(ConnManager.java:295)

at org.apache.sqoop.orm.ClassWriter.getColumnTypes(ClassWriter.java:1833)

at org.apache.sqoop.orm.ClassWriter.generate(ClassWriter.java:1645)

at org.apache.sqoop.tool.CodeGenTool.generateORM(CodeGenTool.java:107)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:478)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:605)

at org.apache.sqoop.Sqoop.run(Sqoop.java:143)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:179)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:218)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:227)

at org.apache.sqoop.Sqoop.main(Sqoop.java:236)

If Hadoop process not running, you will face below problem.

Solution: Start hadoop processes (start-dfs.sh, start-yarn.sh)

17/09/05 14:28:59 WARN ipc.Client: Failed to connect to server: master/192.168.1.101:9000: try once and fail.

java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:495)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:681)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:777)

at org.apache.hadoop.ipc.Client$Connection.access$3500(Client.java:409)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1542)

at org.apache.hadoop.ipc.Client.call(Client.java:1373)

at org.apache.hadoop.ipc.Client.call(Client.java:1337)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:227)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116)

at com.sun.proxy.$Proxy10.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:787)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:398)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:163)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:155)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:335)

at com.sun.proxy.$Proxy11.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:1700)

at org.apache.hadoop.hdfs.DistributedFileSystem$27.doCall(DistributedFileSystem.java:1436)

at org.apache.hadoop.hdfs.DistributedFileSystem$27.doCall(DistributedFileSystem.java:1433)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1433)

at org.apache.hadoop.fs.FileSystem.exists(FileSystem.java:1436)

at org.apache.hadoop.mapreduce.lib.output.FileOutputFormat.checkOutputSpecs(FileOutputFormat.java:145)

at org.apache.hadoop.mapreduce.JobSubmitter.checkSpecs(JobSubmitter.java:268)

at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:141)

at org.apache.hadoop.mapreduce.Job$11.run(Job.java:1341)

at org.apache.hadoop.mapreduce.Job$11.run(Job.java:1338)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1807)

at org.apache.hadoop.mapreduce.Job.submit(Job.java:1338)

at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:1359)

at org.apache.sqoop.mapreduce.ImportJobBase.doSubmitJob(ImportJobBase.java:196)

at org.apache.sqoop.mapreduce.ImportJobBase.runJob(ImportJobBase.java:169)

at org.apache.sqoop.mapreduce.ImportJobBase.runImport(ImportJobBase.java:266)

at org.apache.sqoop.manager.SqlManager.importTable(SqlManager.java:673)

at org.apache.sqoop.manager.MySQLManager.importTable(MySQLManager.java:118)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:497)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:605)

at org.apache.sqoop.Sqoop.run(Sqoop.java:143)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:179)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:218)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:227)

at org.apache.sqoop.Sqoop.main(Sqoop.java:236)

Solution: Refer to 'Importing MySQL driver' section above.

java.lang.RuntimeException: Could not load db driver class: com.mysql.jdbc.Driver

at org.apache.sqoop.manager.SqlManager.makeConnection(SqlManager.java:856)

at org.apache.sqoop.manager.GenericJdbcManager.getConnection(GenericJdbcManager.java:52)

at org.apache.sqoop.manager.SqlManager.execute(SqlManager.java:744)

at org.apache.sqoop.manager.SqlManager.execute(SqlManager.java:767)

at org.apache.sqoop.manager.SqlManager.getColumnInfoForRawQuery(SqlManager.java:270)

at org.apache.sqoop.manager.SqlManager.getColumnTypesForRawQuery(SqlManager.java:241)

at org.apache.sqoop.manager.SqlManager.getColumnTypes(SqlManager.java:227)

at org.apache.sqoop.manager.ConnManager.getColumnTypes(ConnManager.java:295)

at org.apache.sqoop.orm.ClassWriter.getColumnTypes(ClassWriter.java:1833)

at org.apache.sqoop.orm.ClassWriter.generate(ClassWriter.java:1645)

at org.apache.sqoop.tool.CodeGenTool.generateORM(CodeGenTool.java:107)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:478)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:605)

at org.apache.sqoop.Sqoop.run(Sqoop.java:143)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:179)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:218)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:227)

at org.apache.sqoop.Sqoop.main(Sqoop.java:236)

If Hadoop process not running, you will face below problem.

Solution: Start hadoop processes (start-dfs.sh, start-yarn.sh)

17/09/05 14:28:59 WARN ipc.Client: Failed to connect to server: master/192.168.1.101:9000: try once and fail.

java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:531)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:495)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:681)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:777)

at org.apache.hadoop.ipc.Client$Connection.access$3500(Client.java:409)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1542)

at org.apache.hadoop.ipc.Client.call(Client.java:1373)

at org.apache.hadoop.ipc.Client.call(Client.java:1337)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:227)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:116)

at com.sun.proxy.$Proxy10.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:787)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:398)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:163)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:155)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:95)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:335)

at com.sun.proxy.$Proxy11.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:1700)

at org.apache.hadoop.hdfs.DistributedFileSystem$27.doCall(DistributedFileSystem.java:1436)

at org.apache.hadoop.hdfs.DistributedFileSystem$27.doCall(DistributedFileSystem.java:1433)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1433)

at org.apache.hadoop.fs.FileSystem.exists(FileSystem.java:1436)

at org.apache.hadoop.mapreduce.lib.output.FileOutputFormat.checkOutputSpecs(FileOutputFormat.java:145)

at org.apache.hadoop.mapreduce.JobSubmitter.checkSpecs(JobSubmitter.java:268)

at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:141)

at org.apache.hadoop.mapreduce.Job$11.run(Job.java:1341)

at org.apache.hadoop.mapreduce.Job$11.run(Job.java:1338)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1807)

at org.apache.hadoop.mapreduce.Job.submit(Job.java:1338)

at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:1359)

at org.apache.sqoop.mapreduce.ImportJobBase.doSubmitJob(ImportJobBase.java:196)

at org.apache.sqoop.mapreduce.ImportJobBase.runJob(ImportJobBase.java:169)

at org.apache.sqoop.mapreduce.ImportJobBase.runImport(ImportJobBase.java:266)

at org.apache.sqoop.manager.SqlManager.importTable(SqlManager.java:673)

at org.apache.sqoop.manager.MySQLManager.importTable(MySQLManager.java:118)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:497)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:605)

at org.apache.sqoop.Sqoop.run(Sqoop.java:143)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:179)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:218)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:227)

at org.apache.sqoop.Sqoop.main(Sqoop.java:236)